This post is taken from the lightning talk I gave at AMRO.

Abstract

I have decided to try to solve a problem that I’m sure we’ve all had – it’s very difficult to play a tuba and program a computer at the same time. A tuba can be played one-handed but the form factor makes typing difficult. Of course, it’s also possible to make a tuba into an augmented instrument, but most players can only really cope with two sensors and it’s hard to attach them without changing the acoustics of the instrument.

The solution to this classic conundrum is to unplug the keyboard and ditch the sensors. Use the tuba itself to input code.

Languages

Constructed languages are human languages that were intentionally invented rather than developing via the normal evolutionary processes. One of the most famous constructed languages is Esperanto, but modern Hebrew is also a conlang. One of the early European conlangs is Solresol, invented in 1827 by François Sudre. This is a “whistling language” in that its syllables are all musical pitches. They can be expressed as notes, numbers or via solfège.
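To make the three notations concrete, here is a minimal Python sketch that splits a Solresol word into its solfège syllables and converts them to numbers and note names. It is only an illustration: the function names are hypothetical, and mapping do to C (a fixed-do C major scale) is an assumption made for the example.

```python
# The seven Solresol syllables, in scale order.
SYLLABLES = ["do", "re", "mi", "fa", "sol", "la", "si"]
# Assumption for this sketch: fixed do, so do = C, re = D, and so on.
NOTE_NAMES = ["C", "D", "E", "F", "G", "A", "B"]


def split_syllables(word):
    """Split a Solresol word such as 'domifare' into its syllables."""
    word = word.lower()
    syllables = []
    i = 0
    while i < len(word):
        for syl in SYLLABLES:
            if word.startswith(syl, i):
                syllables.append(syl)
                i += len(syl)
                break
        else:
            raise ValueError(f"not a Solresol word: {word!r}")
    return syllables


def to_numbers(word):
    """Express a word as scale-degree numbers, 1 (do) to 7 (si)."""
    return [SYLLABLES.index(s) + 1 for s in split_syllables(word)]


def to_notes(word):
    """Express a word as note names under the fixed-do assumption."""
    return [NOTE_NAMES[SYLLABLES.index(s)] for s in split_syllables(word)]


print(split_syllables("domifare"))
print(to_numbers("domifare"))
print(to_notes("solresol"))
```

Because no syllable is a prefix of another, a word can be read left to right without any ambiguity.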

The “universal languages” of the 19th century were invented to allow different people to speak to each other, but before that some philosophers also invented languages to try to remove ambiguity from human speech. These attempts were not successful, but in the 20th century, the need to invent unambiguous language re-emerged in computer languages. Programming languages are based on human languages. This is most commonly English, although many exceptions exist, including Algol, which was always multilingual.

Domifare

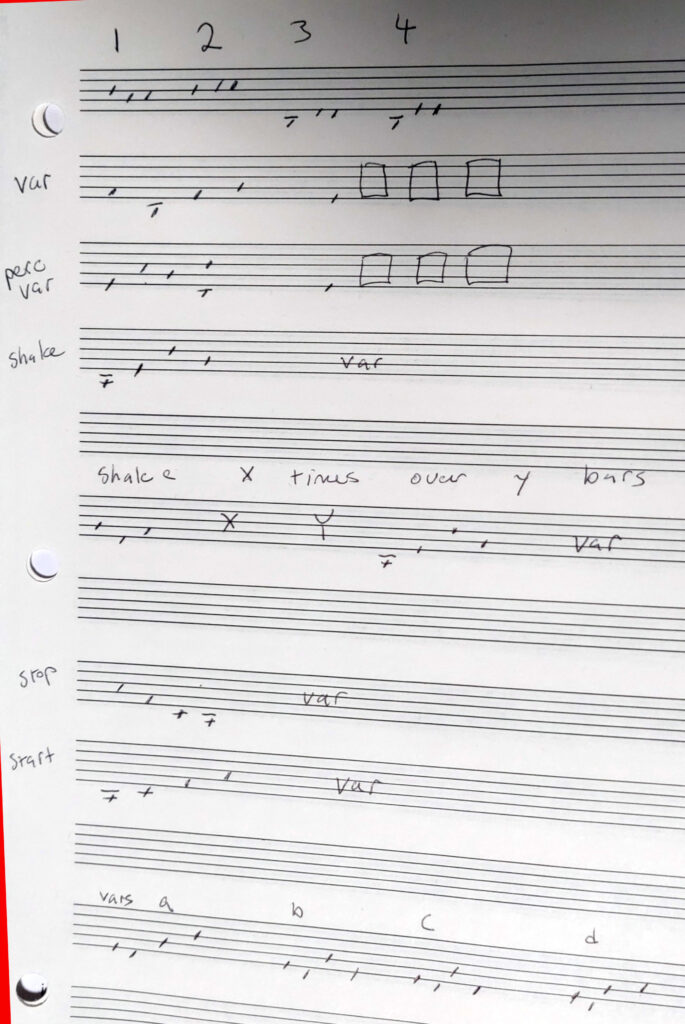

I decided to build a programming language out of Solresol, as it’s already highly systematised and has an existing vocabulary I can use. This language, Domifare, is a live coding language very strongly influenced by ixi lang, which is also written in SuperCollider. Statements are entered by playing tuba into a microphone. These can create and modify objects, all of which are loops.

Creating an object causes the interpreter to start recording immediately. The recording starts to play back as a loop as soon as the recording is complete. Loops can be started, stopped or “shaken”. The loop object contains a list of note onsets, so when it’s shaken, the notes played are re-ordered randomly. A future version may use the onsets to play synthesised drum sounds for percussion loops.
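The idea behind shaking can be sketched in a few lines of Python. This is not the SuperCollider code from Domifare: the function names are hypothetical, and it assumes each note lasts from its onset until the next onset (or the end of the loop), with onsets given in seconds.

```python
import random


def segments_from_onsets(onsets, loop_duration):
    """Turn a list of note onsets (seconds) into (start, duration) segments.

    Assumes each note runs until the next onset, and the last note runs
    until the end of the loop.
    """
    bounds = list(onsets) + [loop_duration]
    return [(bounds[i], bounds[i + 1] - bounds[i]) for i in range(len(onsets))]


def shake(onsets, loop_duration, rng=None):
    """Return the loop's note segments in a random playback order."""
    if rng is None:
        rng = random.Random()
    segments = segments_from_onsets(onsets, loop_duration)
    rng.shuffle(segments)
    return segments


# A two-second loop with notes starting at 0.0, 0.5 and 1.25 seconds.
for start, duration in shake([0.0, 0.5, 1.25], 2.0):
    print(f"play recording from {start:.2f}s for {duration:.2f}s")
```

Shuffling whole segments keeps every recorded note intact and leaves the total loop length unchanged; only the order changes.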

Pitch Detection

Entering code relies on pitch tracking. This is a notoriously error-prone process. Human voices and brass instruments are especially difficult to track because of the overtone content. That is to say, these sounds are extremely rich and have resonances that can confuse pitch trackers. This is especially complicated for the tuba in the low register because the overtones may be significantly louder than the fundamental frequency. This instrument design is useful for human listeners. Our brains can hear the higher frequencies in the sound and use them to identify the fundamental sound even if it’s absent because it’s obscured by another sound. For example, if a loud train partially obscures a cello sound, a listener can still tell what note was played. This also works if the fundamental frequency is lower than humans can physically hear! There are tubists who can play notes below the range of human hearing, but which people perceive through the overtones! This is fantastic for people, but somewhat challenging for most pitch detection algorithms.

I included two pitch detection algorithms. One is a time-based system I’ve blogged about previously, and the other is built into SuperCollider and uses a technique called autocorrelation. Much to my surprise, the autocorrelation was the more reliable, although it still makes mistakes the majority of the time.
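For readers who haven’t met autocorrelation before, here is a minimal Python sketch of the general technique. It is not SuperCollider’s implementation and not the code used in the piece; the function names, the 8000 Hz sample rate and the 40 to 400 Hz search range are all assumptions chosen for the example. The test signal contains only the 2nd, 3rd and 4th harmonics of 55 Hz, imitating a low note whose fundamental is missing.

```python
import math


def autocorr_pitch(samples, sample_rate, fmin=40.0, fmax=400.0):
    """Estimate pitch by finding the lag at which the signal best matches itself."""
    min_lag = int(sample_rate / fmax)   # shortest period we look for
    max_lag = int(sample_rate / fmin)   # longest period we look for
    window = len(samples) - max_lag     # same number of terms for every lag
    if window <= 0:
        raise ValueError("signal too short for the requested fmin")
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Multiply the signal by a delayed copy of itself and sum.
        score = sum(samples[n] * samples[n + lag] for n in range(window))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


def missing_fundamental_tone(f0, sample_rate, n_samples, harmonics=(2, 3, 4)):
    """Sum of overtones of f0, with no energy at f0 itself."""
    return [
        sum(math.sin(2 * math.pi * f0 * h * n / sample_rate) for h in harmonics)
        for n in range(n_samples)
    ]


tone = missing_fundamental_tone(55.0, 8000, 2048)
print(f"estimated pitch: {autocorr_pitch(tone, 8000):.1f} Hz")
```

The estimate lands close to 55 Hz even though the signal has no energy there, because the waveform still repeats once per period of the fundamental. Real tuba input is much messier than this synthetic tone, which is where the mistakes come from.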

Other possibilities for pitch detection might include tightly tuned bandpass filters. This is the technique used by David Behrman for his piece On the Other Ocean, and was suggested by my dad (who I’ve recently learned built electronic musical instruments in the 1960s or 70s!!). Experimentation is required to see if this would work.
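As a rough digital stand-in for a bank of tightly tuned filters, the Python sketch below uses the Goertzel algorithm to measure the energy at one target frequency per solfège note and picks the strongest. This swaps in a different technique from analogue bandpass filters, and everything here is an assumption for illustration: the function names, the choice of the octave from C3 to B3, and the sample rate. It has not been tried with a tuba.

```python
import math

# Assumed target frequencies (Hz) for one octave, C3 to B3, equal temperament.
TARGETS = {
    "do": 130.81, "re": 146.83, "mi": 164.81, "fa": 174.61,
    "sol": 196.00, "la": 220.00, "si": 246.94,
}


def goertzel_power(samples, sample_rate, freq):
    """Energy of the signal at a single frequency (Goertzel algorithm)."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def strongest_note(samples, sample_rate, targets=TARGETS):
    """Return the syllable whose tuned detector responds most strongly."""
    return max(targets, key=lambda name: goertzel_power(samples, sample_rate, targets[name]))


# A plain 196 Hz sine wave should trigger the 'sol' detector.
sr = 8000
tone = [math.sin(2 * math.pi * 196.0 * n / sr) for n in range(2048)]
print(strongest_note(tone, sr))
```

A detector like this only listens at the frequencies it is tuned to, so a note with a weak fundamental could easily trigger the filter for one of its overtones instead.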

AI

Another possible technique likely to be more reliable is AI. I anticipate this could potentially correctly identify commands more often than not, which would substantially change the experience of performance. Experimentation is needed to see if this would improve the piece or not. Use of this technique would also require pre-training variable names, so a player would have to draw on a set of pre-existing names rather than deciding names on the fly. However, in performance, I’ve had a hard time deciding on variable names on-the-fly anyway and have ended up with random strings.

Learning to play this piece already involves a neural learning process, but a physical one in my brain, as I practice and internalise the methods of the DomifareLoop class. It’s already a good idea for me to pre-decide some variable names and practice them so I have them ready. My current experience of performance is that I’m surprised when a command is recognised, play something weird for the variable name, and am then caught unawares again when the loop immediately begins recording. I think this experience would be improved for both the performer and the listener with more preparation.

Performance Practice

The theme for AMRO, where this piece premiered, was “debug”, so I included both pitch detection algorithms and left space to switch between them and adjust parameters instead of launching with the optimal setup. The performance was in Stadtwerkstatt, which is a clubby space, and this nuance didn’t seem to come across. This kind of on-stage debugging would probably not be compelling for most audiences.

Audience feedback was entirely positive, but this was a very friendly crowd, so negative feedback would not have been within the community norms. Constructive criticism may not have been offered either.

My plan for this piece is to perform it several more times and then record it as part of an album tentatively titled “Laptop and Tuba” which would come out in 2023 on the Other Minds record label. If you would like to book me, please get in touch. I am hoping that there is a recording of the premiere.