About two weeks ago, the very interesting artist Sophie Hoyle approached me to code a video cutter and graphics display using theta brain waves. This was for a performance at the new Science Gallery in conjunction with their exhibit on anxiety.

I’ve done a biometric video cutting app for Sophie before, so I said ok, despite the quick turnaround. This fast time frame was not their fault. The first brain wave reader they ordered was stopped by UK Customs and returned to the sender. So they then ordered a more consumer-focused brainwave reader and hired me once it arrived.

Emotiv

The Emotiv is a very Kickstarter, very Silicon Valley brain wave headset. It comes with an iPhone app or Android app which asks for an enormous and completely unnecessary amount of personal data, which is sent back to the company in the US, where they can mine it in various ways completely unrelated to product functionality. People buy this thing to use it while meditating, to monitor whether they are getting the right brain wave shapes. Some might argue that this is, perhaps, a misunderstanding of the goals and practices of meditation, but those people have not spent enough time in corporate offices in San Jose.

When it launched on Kickstarter, there was a community SDK supporting all the various operating systems, including Linux. This has since been dropped in favour of an API for Windows and a separate one for Mac only. It requires an API key to get access to some of the data. You can rent the API key from them for a modest fee that makes sense if you think you’re going to sell a lot of apps. The art and educational markets are kind of neglected. Nevertheless, some intrepid soul worked backwards to decode the data and built a Python library to access it.

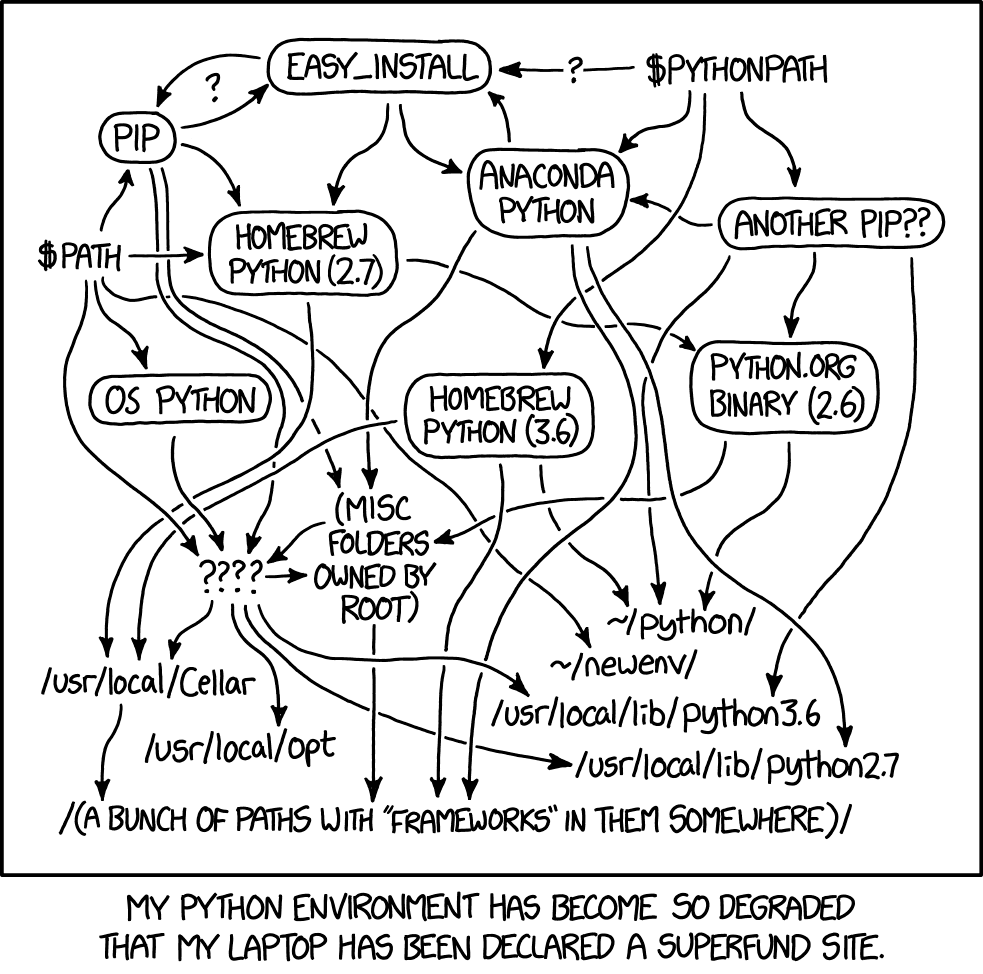

Python is really great to write, but the Python 2/3 divide has always confused me. Plus it’s very easy to accidentally get ten different versions of the same library on one’s computer. It took me an embarrassingly long time to get my Python libraries under control.

Then I found that the reverse-engineered library was relying on a different version of the HID library. I started trying to work that out and ran into a wall. I started to try to update the emotiv library itself, but that way lies danger, so I quickly gave up and switched to a Mac I’d borrowed from my uni, so I could use the official API.

The Mac has a relatively new OS, but is 9 years old, so I had to download an old version of Xcode. This is how I learned that old versions of Xcode look like they’re available from Apple, but once you spend hours downloading them, something weird is going on with key authentication and they won’t unzip. I borrowed a laptop from Sophie and couldn’t get Xcode on to it either.

There was always the option of doing things not-quite live: we could record a visualisation of the brainwave data, cut a video to that data and have live music based on the prepared video, so the music would react live to the brainwaves, but nothing else would. It’s sub-optimal, but time was running really short. I couldn’t get the Emotiv to pair successfully with either computer because it only works with extremely recent operating systems.

(The commercial world of software and operating systems is a hell-scape where people with more money than you try to prevent you from being able to use expensive things which you’ve already purchased. I cannot believe what people put up with. Come to Linux. We want you to be able to actually use your stuff!)

I gave the device back to Sophie with intense apologies, saying I’d had no luck and they’d be better off doing their own recording and cutting the video to it, because there was nothing I could do. It was about 3 days before the performance. This was not good. I felt terrible. They were in a panic. Things were bad.

They found a computer with a new operating system and also could not get the device to work. They gave it to the sponsoring organisation who had purchased it and it didn’t work for them either. It was a £500, over-engineered, under-functional, insufficiently tested piece of Kickstarter shit.

The Emotiv gets 0 stars out of every star in the galaxy.

Bitalino

About 2 days before the performance, they told me they still had the bitalino from the last project of theirs that I worked on, and asked whether that would work. I said it would, so, with less than 48 hours to go, I went and picked up the device.

Alas, I had not written down how I got it to pair the last time, so I spent a lot of time just trying to connect to it. Then I had to figure out how to draw a graph. The gallery, meanwhile, was freaking out. All I had was a white screen with a scrolling graph made up of extremely fake data that didn’t even look convincing. They wanted me to come in and show what I had. Nothing was working. I showed up an hour late and everything looked like shit.

Then, while I was there, I figured out how to get the bloody thing to connect. “That’s real data!” I shouted, pointing at the projection, jumping up and down. There were 24 hours left and I had 1.5 hours of teaching to do the next morning.

I went home and wrote a quick bandpass filter with OSC callback in SuperCollider. The Processing program would start a SuperCollider synth and reset an input value whenever it read data from the device. The synth would write that to a control bus. A separate synth read from the control bus and did a band pass filter on it. Theta waves are about 4 Hz, so hopefully this is good enough. I did an RMS of the filter output to figure out how much theta was going on. Then sclang sent OSC messages back to Processing telling it the state of the amplitudes of the bank of bandpass filters.
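For anyone curious what the RMS step amounts to, here is a minimal sketch in Python. It is not the SuperCollider code from the performance: the `RunningRMS` class, the 100-sample window and the 100 Hz sample rate in the usage note are all made up for illustration. It takes samples that have already been band-passed and reports how much energy is in them over the most recent window.

```python
import math
from collections import deque


class RunningRMS:
    """Root-mean-square level over the most recent `size` samples.

    Hypothetical helper for illustration; feed it the output of a
    band-pass filter to estimate how strong that band currently is.
    """

    def __init__(self, size):
        self.window = deque(maxlen=size)
        self.sum_sq = 0.0  # running sum of squared samples in the window

    def push(self, sample):
        """Add one sample and return the RMS of the current window."""
        if len(self.window) == self.window.maxlen:
            # The oldest sample is about to fall out of the window.
            oldest = self.window[0]
            self.sum_sq -= oldest * oldest
        self.window.append(sample)
        self.sum_sq += sample * sample
        # Guard against tiny negative values caused by rounding.
        return math.sqrt(max(self.sum_sq, 0.0) / len(self.window))
```

Assuming a 100 Hz sample rate, a one-second window holds exactly four cycles of a 4 Hz sine, so a full-scale 4 Hz sine settles at about 0.707 and silence stays at 0. That single number per band is the kind of value that could be sent back over OSC.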

In case the bitalino crapped out (spoiler: it didn’t), I wrote an emergency brown noise generator and used it for the analysis. Both looked great.
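A fallback like that can be very small. The sketch below is Python rather than the SuperCollider used on the night, and the function name, step size and leak factor are assumptions chosen for illustration. It uses the usual trick of integrating white noise (a random walk), with a slight leak and a clamp so the value cannot wander off forever.

```python
import random


def brown_noise(count, step=0.02, leak=0.999, seed=None):
    """Return `count` samples of brown-ish noise in the range [-1, 1].

    Hypothetical stand-in for sensor data: each sample is the previous
    one (slightly decayed by `leak`) plus a small random step.
    """
    rng = random.Random(seed)
    value = 0.0
    samples = []
    for _ in range(count):
        # Integrate white noise; the leak pulls the walk back towards 0.
        value = leak * value + rng.uniform(-step, step)
        # Clamp so the fake signal stays in a plottable range.
        value = max(-1.0, min(1.0, value))
        samples.append(value)
    return samples
```

Because most of the energy ends up in the low frequencies, the result wanders slowly in a way that looks more like a biosignal than plain white noise would.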

Then I went to work on the video switching. It was working brilliantly, but at the size of a postage stamp. I got it to full screen and it stopped working. It was time for the tech check.

I went to the gallery, still unsure what was going on with the video switching. I finally discovered that Processing won’t grab images from the video file when Jack is running. SuperCollider relies on Jack to run its server even if, as in this case, it’s not producing any output audio.

I quickly set out to write a bandpass filter that didn’t need SuperCollider. I got a biquad programmed and got the right settings for it when I realised I didn’t know how to make brown noise to compensate for possible data dropout. I decided to declare a code freeze, rely on SuperCollider, give up on the live video cutting and use a prepared video.
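For reference, a biquad band-pass is only a few lines. The sketch below is in Python and is not the code from the gig. It uses the widely published Audio EQ Cookbook band-pass formulas (constant 0 dB peak gain), and the class name, the Q of 1 and the 100 Hz sample rate in the usage note are assumptions for illustration.

```python
import math


class BiquadBandpass:
    """Second-order band-pass filter (cookbook form, unity gain at centre).

    Hypothetical illustration: centre frequency, Q and sample rate
    are whatever the caller chooses.
    """

    def __init__(self, centre_hz, q, sample_rate):
        w0 = 2.0 * math.pi * centre_hz / sample_rate
        alpha = math.sin(w0) / (2.0 * q)
        a0 = 1.0 + alpha
        # Coefficients normalised by a0; b1 is zero for this filter type.
        self.b0 = alpha / a0
        self.b2 = -alpha / a0
        self.a1 = -2.0 * math.cos(w0) / a0
        self.a2 = (1.0 - alpha) / a0
        # Previous two inputs and outputs.
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0

    def process(self, x):
        """Filter one sample and return the filtered value."""
        y = (self.b0 * x + self.b2 * self.x2
             - self.a1 * self.y1 - self.a2 * self.y2)
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        return y
```

With something like `BiquadBandpass(4.0, 1.0, 100.0)`, a 4 Hz sine passes through at roughly full amplitude once the filter has settled, while a 40 Hz sine is cut to a small fraction of its level.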

I started working out a protocol to ensure I could always get a good Bluetooth connection, which worked.

All of the now-working thing is on GitHub, with some better documentation.

The Sound Check & Performance

The Sound Check went brilliantly, but they played for the full duration until just before the show was to start. I was worried about having time to reset the Bluetooth, but it was ok and I didn’t need to reset everything. Then Sophie went to the loo whilst still wearing the bitalino. The connection dropped.

The crowd was at the door. I rebooted everything and it connected, but the screens got reversed in Processing, so it opened the waves in the wrong window. I rebooted again and it was wrong again! I dragged the window around to be full screen on the projector, but it was blank. However, if I put Gnome into that mode where all the windows are made small so you can look at them and find the one you want, then you could see the graph. So the whole thing ran in that mode. The rendering was impacted, but the idea got across.

Aftermath

The sponsoring organisation and the gallery were both very happy. It was the best-attended performance since they got the space. The audience seemed happy and I overheard some positive comments.

I hadn’t slept more than a few hours a night for well over a week as I desperately tried to get this thing to work, so I was quite grumpy afterwards. I went home and slept for like 11 hours. I feel like a new man.

Lessons Learned

I didn’t know the Emotiv wasn’t properly supported because I didn’t properly read the support documents before accepting the gig. If I’d done adequate research before accepting, I would have known that I couldn’t deliver the original plan. Also, I should have noticed that their software store of third-party apps has no content. That was a massive warning sign that I missed.

I didn’t know the actual Emotiv they got was broken because I always assume every problem is a software problem on my computer. This is often correct, but not always. It should have been a bigger red flag when it wouldn’t work with my Android (which also often had software issues). I should have tested it with an iPhone immediately after it failed to work with my Android. I could have known within a few hours that it was fucked, but I carried on trying to figure out Python issues.

Ideally, I’d like to get involved in future projects at an earlier stage – like around the time that equipment is being debated and purchased. I understand exactly why curators and artists don’t hire code monkeys until after they’ve got the parts, but knowing more about the plans earlier on could have led to the purchase of a different item or just using the bitalino from the start.