Recently, I needed to record my screen and audio while SuperCollidering on Ubuntu Studio. (This will also work on other operating systems with some tweaks.) This is the code I included:

    s.waitForBoot({

        ("ffmpeg -f jack -ac 2 -i ffmpeg -f x11grab -r 30 -s $(xwininfo -root | grep 'geometry' | awk '{print $2;}') -i :0.0 -acodec pcm_s16le -vcodec libx264 -vpre lossless_ultrafast -threads 0" +
            "/home/celesteh/Documents/" ++ Date.getDate.bootSeconds ++ ".mkv"
        ).runInTerminal;

        ....

    })

As far as I am able to determine, that allows you to record stereo audio from jack and the screen content of your primary screen. Obviously the syntax is slightly dense.
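To make the dense syntax easier to read, here is a sketch that rebuilds the same command one group of options at a time, with a comment on each group. The flags are the ones used above; the function name `build_record_cmd` and its two parameters are hypothetical, added only for illustration. It prints the command instead of running it. Note that `-vpre lossless_ultrafast` relies on a preset file that may not be present in every ffmpeg build.

```shell
#!/bin/sh
# Sketch: print the recording command piece by piece.
# build_record_cmd is a hypothetical helper, not part of ffmpeg.
build_record_cmd() {
    size=$1      # capture size; the original fills this in from xwininfo -root
    outfile=$2   # where to write the recording

    # Audio input: JACK, 2 channels. "ffmpeg" is the name of the JACK client
    # that will appear in the connections window.
    audio_in="-f jack -ac 2 -i ffmpeg"

    # Video input: grab X11 display :0.0 at 30 frames per second.
    video_in="-f x11grab -r 30 -s $size -i :0.0"

    # Audio codec: uncompressed 16-bit PCM. Video codec: H.264 via libx264
    # with the lossless_ultrafast preset. -threads 0 lets the encoder pick
    # the number of threads.
    codecs="-acodec pcm_s16le -vcodec libx264 -vpre lossless_ultrafast -threads 0"

    printf 'ffmpeg %s %s %s %s\n' "$audio_in" "$video_in" "$codecs" "$outfile"
}

# Example with an assumed 1920x1080 screen and a placeholder output path.
build_record_cmd 1920x1080 /tmp/example.mkv
```

Reading the output back against the one-line version above should show the same options in the same order.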

I was unable to figure out how to tell it to automatically get the jack output I wanted, so in order to run this, I first started JACK Control, used that to start jack, and then evaluated my SC code, which included the above line. That opened a terminal window.

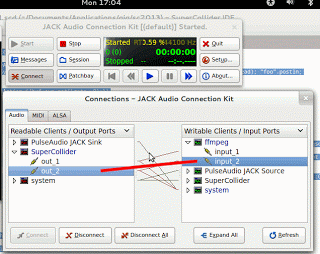

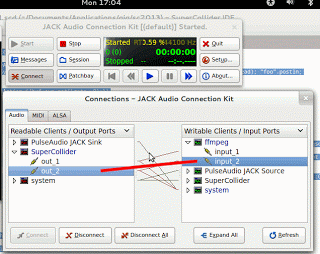

Then I went back to JACK Control and clicked the Connect button to open a window with a list of connections. I took the SuperCollider output connections and dragged them to the ffmpeg input connections, which enabled the sound. This step is very important, as otherwise the recording will be silent. The dragging is illustrated in the accompanying image by the thick, red line.
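The same connection can, in principle, be made from a command line with `jack_connect`, one of the small tools that ship with JACK. The sketch below has not been tested against this exact setup and assumes the port names: `SuperCollider:out_1` and `SuperCollider:out_2` for the server, and `ffmpeg:input_1` and `ffmpeg:input_2` for ffmpeg's JACK client. Run `jack_lsp` while both are running to see the real names on your system. The function `connect_sc_to_ffmpeg` and the `DRY_RUN`, `SC_CLIENT` and `FF_CLIENT` variables are hypothetical.

```shell
#!/bin/sh
# Sketch: connect SuperCollider's outputs to ffmpeg's JACK inputs.
# The port names are assumptions; verify them with `jack_lsp`.
SC_CLIENT=${SC_CLIENT:-SuperCollider}
FF_CLIENT=${FF_CLIENT:-ffmpeg}

connect_sc_to_ffmpeg() {
    for ch in 1 2; do
        src="$SC_CLIENT:out_$ch"
        dst="$FF_CLIENT:input_$ch"
        if [ "${DRY_RUN:-1}" = 1 ]; then
            # Default: only show what would be run.
            echo "jack_connect $src $dst"
        else
            jack_connect "$src" "$dst"
        fi
    done
}

connect_sc_to_ffmpeg
```

Set `DRY_RUN=0` to actually make the connections. That only works once ffmpeg has started, because its ports do not exist before then.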

Then AFTER making the audio connection, I started making sound with SuperCollider, which was then recorded. When I finished, I went to the terminal window opened by SuperCollider and typed Ctrl-C to stop the recording. Then, I quit the SC server and JACK.

After a few moments, the ffmpeg process finished quitting. I could then watch the video and hear sound via VLC (on my system, VLC does not play audio if jack is running, so check that if your film is silent). I used OpenShot to cut off the beginning part, which included a recording of me doing the jack connections.

The recommended way of recording desktop output on Ubuntu Studio is recordmydesktop, but there are many advantages to doing it with ffmpeg instead. There is a bug in recordmydesktop which means it won’t accept JACK input, so you have to record the video and audio separately and splice them together. (If you choose to do this, note that you need to quit JACK before recordmydesktop will generate your output file.) Also, I found that recordmydesktop took up a lot of processing power and the recording was too glitchy to actually use. The command line for it is a LOT easier, though, so pick your preference.

You need not include the command to start ffmpeg in your SuperCollider code. Instead of using the runInTerminal message, you can just run it in a terminal. As above, make sure you start JACK before you start this code and don’t forget to make the connections between it and ffmpeg. I prefer to put it in the code because then I don’t forget to run it, but this is entirely a matter of personal preference.
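If you do launch it from a terminal, a small wrapper can guard against forgetting to start JACK first. This is a sketch under assumptions: `jack_is_running` and `output_path` are hypothetical helper names, the check relies on `jack_lsp` exiting with an error when it cannot reach a server, and the file is named with `date +%s` (a Unix timestamp) rather than SuperCollider's `bootSeconds`.

```shell
#!/bin/sh
# Sketch of a pre-flight check before recording from a terminal.

# Succeeds only if jack_lsp is installed and can reach a JACK server.
jack_is_running() {
    command -v jack_lsp >/dev/null 2>&1 && jack_lsp >/dev/null 2>&1
}

# Print a timestamped .mkv path inside the directory given as $1.
output_path() {
    printf '%s/%s.mkv\n' "$1" "$(date +%s)"
}

if jack_is_running; then
    echo "JACK is up; record to $(output_path "$HOME/Documents")"
    # Start your ffmpeg command here, then make the JACK connections.
else
    echo "Start JACK first, then run this again." >&2
fi
```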

Cross-platform

Almost all of the programs linked above are cross-platform, so this is very likely to work on Mac or Windows with only a few changes. Mac users have two ways to proceed. One is to use JACK with QuickTime: you can use JACK to route audio on your system so that the SuperCollider output goes to the QuickTime input. The other, which also applies if you’re on Windows, is to stay with ffmpeg. In that case you will need to change the command line so that it gets the screen geometry in a different way and captures from a different device. Check the man pages for ffmpeg and for avconv, which comes with it. Users on the #ffmpeg channel on freenode can also help. Leave a comment if you’ve figured it out.
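As a possible starting point, ffmpeg's device documentation lists `avfoundation` for capture on macOS and `gdigrab` on Windows, alongside `x11grab` on X11. The sketch below only prints the input options that would stand in for the `-f x11grab ... -i :0.0` part of the command. It is untested here, `screen_input_for` is a hypothetical helper, and the avfoundation device index `1` is a guess that you would check with `ffmpeg -f avfoundation -list_devices true -i ""`. It does not cover the audio side.

```shell
#!/bin/sh
# Sketch: choose ffmpeg screen-capture input options by platform name.
# screen_input_for is a hypothetical helper; $1 is the output of `uname -s`.
screen_input_for() {
    case "$1" in
        Linux)
            echo "-f x11grab -i :0.0" ;;
        Darwin)
            # Device index 1 is an assumption; list devices to find the screen.
            echo "-f avfoundation -i 1:none" ;;
        MINGW*|MSYS*|CYGWIN*)
            # gdigrab captures the whole desktop when given "desktop".
            echo "-f gdigrab -i desktop" ;;
        *)
            echo "unknown platform: $1" >&2
            return 1 ;;
    esac
}

screen_input_for "$(uname -s)" || true
```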