In my previous post, I described how to install and start the SuperCollider Moo. In this post, I'll explain how to use the Moo and how to move around in it.

Note that this is an early version, and I intend to gradually bring the SC Moo closer to LambdaMOO's commands and syntax. Note also that the database is static: your changes will be visible to you and to others while you are logged in, but they will not be saved. If you make something you really like, please keep a local copy of it.

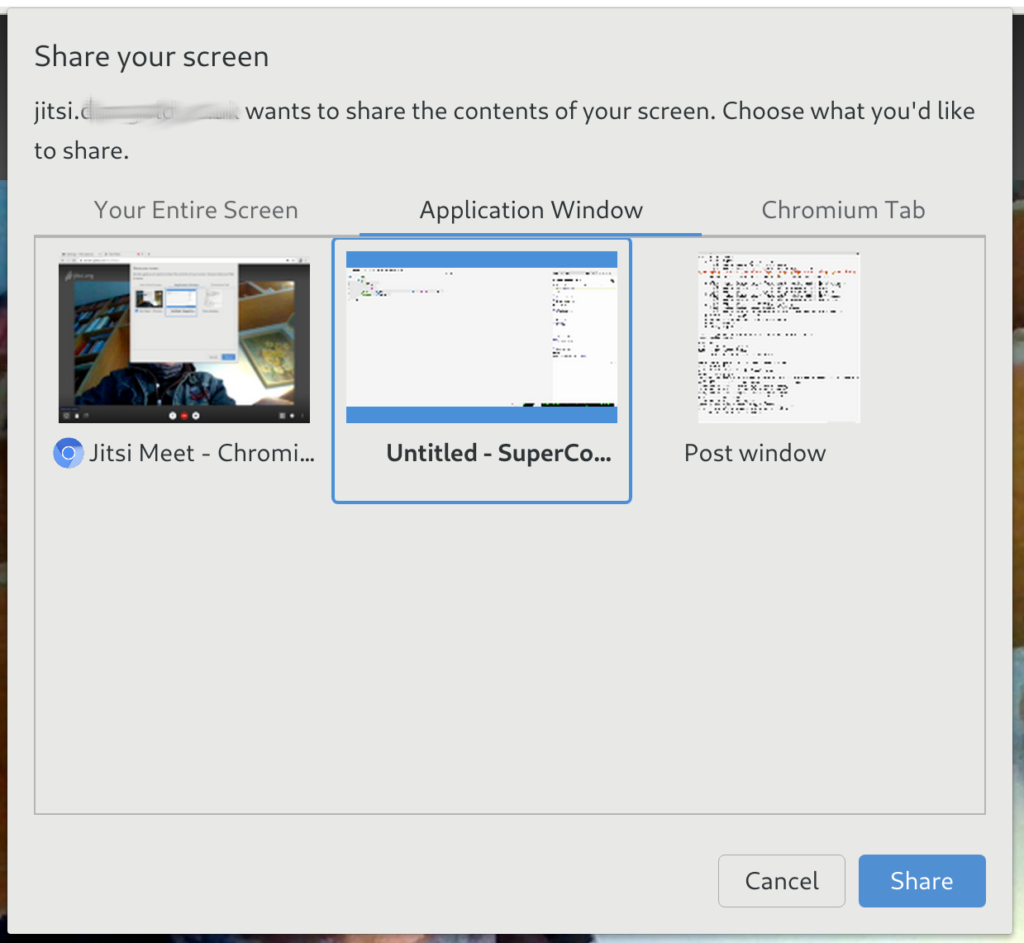

When you log in, you'll see a lot of messages in the SC Post window, including many error messages. Just wait until the GUI opens. Its colour is random, but it always has three text areas.

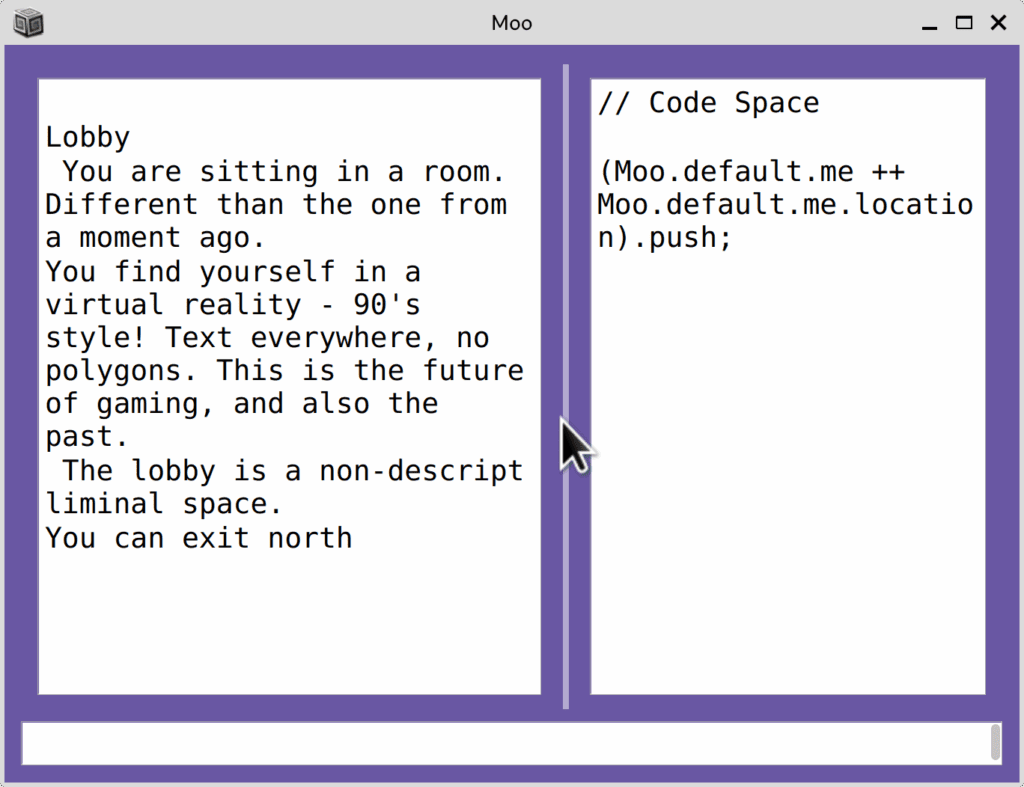

The left-hand side shows the Moo's output. The right-hand side and the bottom are both for input. The right-hand side is meant for entering code. If you just want to use the Moo as a user, you can make that side smaller by clicking and dragging the grey line that separates the two sides. Then enter your Moo commands in the bottom text area, pressing Enter or Return to evaluate each one.

When you log in, your character will connect to the lobby, and you will see text describing the room. Below that is a list of objects, if any are present, then a list of other users, if any are present, and finally a list of exits. If there are no exits, it will say “There is no way out”, because I thought that was funny.

You can look at the room again at any time by typing “look” (without the quotes) and pressing Enter in the bottom text area of the window. In the current iteration of the database, the lobby contains a flyer. To look at it, type “look flyer” and press Enter. To look at yourself, type “look me”.

The default description of your character isn't very exciting, so you might take a moment to change it. Type ‘describe me as "My description goes here."’ (without the single quotes), putting your description inside the double quotes. After you change it, try “look me” again. This is what others will see when they look at your character.

You can move through the Moo by typing the names of the exits. From the lobby, you can type “north”. This will take you to the bar, which has several objects. You can look at all of them, and some of them have additional verbs, that is, they are interactive. To see the verbs on an object, type “verbs” followed by the name of the object. For example, “verbs cage” will list the verbs on the cage. One of them is climb, so try “climb cage”.

Some of the verbs have audio on them and some don't. Likewise, some of the objects have attached sound and some don't. Let's look at the jukebox. The verbs on it aren't promising: we can't describe it because we're not the owner, and it doesn't seem to have anything about playing it. So let's put our own audio on it.

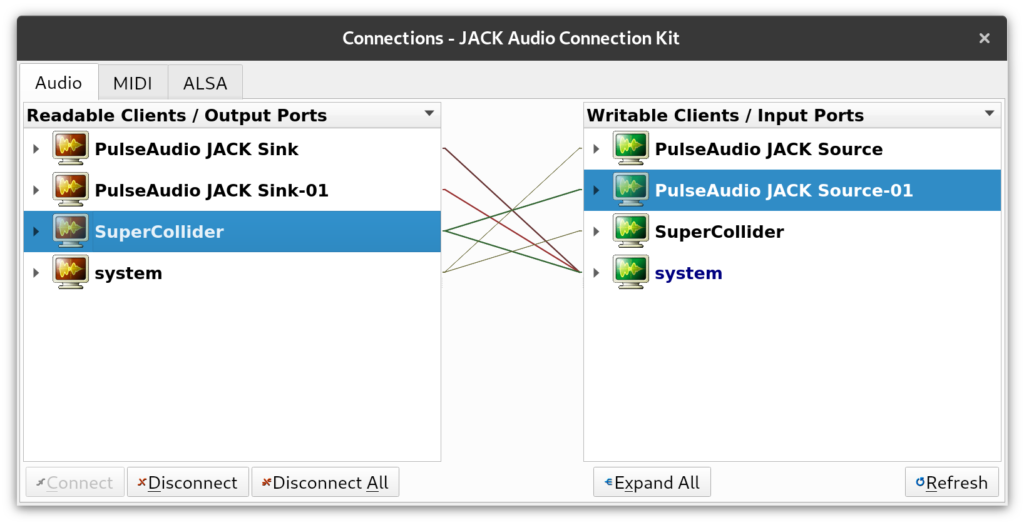

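Go to the right-hand side of the GUI and evaluate the SuperCollider code `(Moo.default.me ++ Moo.default.me.location).push;`. This combines your character and your current room into one environment and makes it the current one. Now we can live code the jukebox, which has been added to the environment as `~jukebox`.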

We can set a pattern with `~jukebox.pattern = Pbind();` and then play it with `~jukebox.play;`. The resulting pattern is super boring, so why not modify it? Try `~jukebox.pattern = Pbind(\freq, 330);`. From there, you can live code it as you would any Pbind or other kind of pattern, and you can use any SynthDef you like. As of now, all of these interventions are entirely local, alas, but networking them is coming.
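For a slightly less boring starting point, here is a sketch that defines a small SynthDef and gives the jukebox a looping melody. The SynthDef name `\jukeboxSine`, its arguments, and the melody are made up for illustration and are not part of the Moo. The sketch assumes you have already pushed the environment as described above, so that `~jukebox` exists, and that reassigning `~jukebox.pattern` changes what the jukebox plays, as the live coding described above implies. If `~jukebox` isn't found, it posts a warning instead of throwing an error.

```supercollider
(
var tune;

// A short percussive sine voice. \jukeboxSine is a hypothetical name, not part of the Moo.
SynthDef(\jukeboxSine, { |out = 0, freq = 440, amp = 0.1, sustain = 0.5, pan = 0|
    var env = EnvGen.kr(Env.perc(0.01, sustain), doneAction: 2); // free the synth when the envelope ends
    var sig = SinOsc.ar(freq) * env * amp;
    Out.ar(out, Pan2.ar(sig, pan));
}).add;

// A looping six-note figure with slightly randomised loudness, played on the SynthDef above.
tune = Pbind(
    \instrument, \jukeboxSine,
    \degree, Pseq([0, 2, 4, 7, 4, 2], inf),
    \dur, 0.25,
    \amp, Pwhite(0.05, 0.15)
);

// Assumes the Moo environment has been pushed so that ~jukebox exists.
if(~jukebox.notNil) {
    ~jukebox.pattern = tune;
} {
    "~jukebox not found: push the Moo environment first".warn;
};
)
```

If the jukebox isn't playing yet, follow this with `~jukebox.play;` as above. Building the pattern in a local variable first keeps the hand-off to the jukebox to one line, so you can swap in a different Pbind, or a different SynthDef via `\instrument`, by editing only the `tune = ...` part.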