One day, a little while into lockdown, I rang up my friend Shelly Knotts and suggested we reboot the Network Music Festival, which had last run 6 years earlier. “Leaving aside all the reasons this is a terrible idea,” I began and she revealed that she’d had the same thought. We were in.

She made a Gantt chart, a drawing that shows dependencies for scheduling, and said the soonest reasonable time to do it was roughly three months in future. So we set that date and set out. Our goals were to do an all-telematic festival with an open call, a few invited acts and no use of google. We were not to achieve all these goals, but we did put on a festival!

Shelly made a budget and asked me for some estimates. My first mistake was not taking that step seriously enough. We’d applied for funding previously and never gotten it, so I did not put in any pay for myself and I did not spend enough time looking at how much streaming would actually cost assuming we could just buy two months of a vimeo subscription. It turns out you can’t sign up for less than a year.

We already had a wordpress site with a minor malware problem, a google mailing list and a gmail account. I removed the malware and installed bunch of security software on wordpress, but didn’t have time to upgrade or replace the theme. I tried to setup a mailman list, but ran into some weird problem with the anti-spam features. And as a temporary measure, I set the new email address to direct to the old one. All of these things are still on my todo list.

To run the open call, I looked at all the conference management systems widely used. Like many of the formerly no-cost solutions I remembered from 2014, these charge money now. I wasn’t sure how much response we would get, but I was worried about capping it at the free tier. All of these systems are also designed for science conferences, not artistic events. I decided to use Ninja Forms.

To test Ninja Forms, I put up a default wordpress installation at a subdomain and put Ninja Forms on it. My mistake as that this was not a duplication of our existing site – it had many of the same plugins but not the same theme and certainly not the same content. It wasn’t a proper testing server. So as the open call was running, I got some email form people who were unable to submit their forms. I couldn’t submit them either – something about the content was screwing up and it wasn’t the post length or anything I could pin down. It worked fine on the testing site. We quickly recreated the forms on Google.

Meanwhile, rather than use Google sheets or their online text editor, we started using Cryptpad because Fossbox has an account there. Their spreadsheet glitched and lost a lot of data. We filed a bug report and they said they fixed the problem, but we did not return to them for spreadsheets. We did use their rich text documents, which work beautifully.

When it came time to combine all the data from the open call forms, I put both batches of data into CSVs and used Libre Office to create a giant set of sheets. I assigned entries IDs. By hand. This was a mistake. And broke them up into a bunch of sheets associated with submission types. I also looked for duplicates and removed them – sorting them by title and email to look for matches. I used a mail merge operation to product a bunch of html documents with anonymised submissions for our reviewers. Because I sorted them after assigning IDs, the names of the documents and the ID numbers didn’t match. Only one reviewer noticed.

Meanwhile, we’d been collecting potential reviewers into an excel sheet. We tried to have equal numbers of men and people who are not men (read: women and enbies, a group that otherwise should not be lumped in together). We found that men were way more likely to agree to do reviews and ended up asking about twice as many women. It’s extremely fortunate that I decided not to use the free tier of Easy Chair as we had more than double the number of submissions they allow. It was a real scramble trying to find reviewers. I returned to facebook and leaned on friends for recommendation.

I used the UNO bridge from libre office to python to email all the reviewers their agreed number of reviews. Each item was to get double reviewed, once by a man and once not by a man. Every item did get reviewed at least twice. A few got additional reviews. Reviews went to another google sheet.

Collating the submissions with the reviews is too much to reasonably to in a spreadsheet, so I imported all the submissions and all the review CSVs into sqlite3. I wrote some python scripts to spit out submissions coupled with reviews so we could use them to figure out what to accept and reject. These were sorted by score. We decided that everything with a score of 4 or 5 should probably be accepted and anything at a 2 or lower should probably be rejected. However, we did both look at every single one of the submissions and decide to take them or not, sometimes overriding a low score, but never a high score. We put our decisions into a spreadsheet and then had a meeting to discuss only our disagreements.

Like all our meetings, this was held on Jitsi Meet, one of the platforms we were considering for workshops. And, indeed, it was not just one meeting, but several. We started out imagining getting around 50 submissions and accepting around 25. We got 164 and accepted 101.

After we came to an agreement about who to programme, I spit out sheets with just the reviews and emailed them to all the submitters. It was here that an error came to light. I’d assigned the same ID to two different groups and they both got back a mixture of reviews meant for both of them. Because I’d exported the submissions via a mail merge in libre office, it hadn’t cared about the duplicated ID. It made a file for every single row of the spreadsheet. So everything had been reviewed, but the reviews had been jumbled up. We got an email from one of the groups saying they thought they’d received the wrong reviews. I double checked everything then and this only happened to one group.

Once we knew who we were accepting, we asked accepted groups to verify if they would perform and to use a free web meeting calendar to track their availability. We also asked them if they want to correct any of their information. I wrote another python script to copy everyone who accepts into a new sqlite3 database, and replacing their information where they offered corrections. Only a tiny number of groups wrote “no” in those forms, so it worked mostly without a hitch.

I wrote yet another script to upload every database record to our website. While I was doing that and uploading the late responders, Shelly was proofreading everyone’s programme notes and biographies. We shared the database on Dropbox. My internet connection is quite slow and big databases are big and also not text, so automated merging of file changes went catastrophically wrong. Shelly lost most of her edits. We moved our database back to google sheets.

It was then I found out that the meeting-scheduling website would only export a CSV if you bought a year long subscription. I signed up for an account and tried to get the calendar to associate with my new account and it wouldn’t, so at least I was spared the expense. I used google sheets to create a date field incrementing hour by hour for the full duration. I cut and pasted this list into a plain text document and followed every line with a comma. As I moused over every single separate square of the 100 and some possible festival hours, a little popup appeared with a list of everyone who said they were available at that time. I copied and pasted these lines into the text document. This gave me a bunch of lines starting with a date and time, followed by a bunch of identifiers.

I uploaded my new file as a CSV back to google sheets. Each row started with a time and the subsequent cells identified which groups were available. It was a perfect triangle wave of availability. Shelly had made a draft programme and it did not correspond to the listed availabilities. Worse, many of the groups had put their names in the form rather than the submission ID number, so I had to do a lot of database searching to figure out who “Joe Bloggs” was and eventually resolve everyone to an ID.

Then, once I knew who was who, it looked NP complete to figure out how and when to programme them. I found the least available bands and made graphs of when they could play. I started looking into what algorithms might cope with this completely intractable problem. It was then that Shelly put her foot down and demanded she take charge of this creative, curatorial problem. She came back the next day with a working schedule. I still have no idea how she did it.

Our old paypal account still existed and I started seeing notices of donations. But what was the password? The bank account it was linked to was long dead, but an old email with the account information contained enough detail that paypal let me back into the account. Thank goodness.

Around then, I went virtually to the Art Meets Radical Openness conference, which went extremely well, so I decided we should copy them as much as possible. They used BigBlueButton, which I liked, so we began looking for a BigBlueButton host. Fo.am runs a server, so we asked them and because life is uncertain, I also asked a bunch of other people, two of whom agreed. One was senf call, which was great, but in German and the other was nixnet in North America. One thing we didn’t know was how many people could be in a single BBB meeting, so I read the FAQ for the software and it said it should be stress tested by getting some volunteers to all open five browser tabs to the service. I rounded up some friends and found definitively that if anyone opens five browser tabs to BigBlueButton, their laptop will crash. Later, our North American host laughed and said he regularly has groups of 100 people!

AMRO had backchannel chat in Matrix, so we did as well. They ran their own server, which it made it work way better than using the regular Matrix server. The invite link for that service leads to what looks exactly like an error page. I ended up putting a screenshot of that page on our website with an arrow showing where to click.

AMRO had a stream out via DorfTV and an audio-only stream via a radio station, so we decided to try to have a produced stream and an audio stream. The audio-only component was especially important to me, since my home internet quality is variable and I ended up replying on the audio stream to be able to listen to concerts. I’ll talk more about how we did the AV in another post.

AMRO had a website for the conference that had large portions that were just flat files. No caching. No overhead. Fast to load. No trackers. Holger Ballweg, Shelly’s partner, built a flat site for us that had a video player, a link to the audio player, a chat widget and a scrolling schedule that changed times based on your submitted time zone and told you what was coming when. He also made a bunch of pages for our exhibition of web installations.

I was trying to get something similar on the wordpress site, but the calendar plugins did not do what I wanted. We finally ended up making a bunch of Event Bright events and told people to use those to get their time zone. Those events also export in very few clicks to facebook, so we put up a a facebook event for every concert and workshop.

I exported all those events to a google calendar and then used IFFT to post about the events as they started to our official twitter account. I hope this drew people over. Most of our promotion of the open call and the festival was twitter and facebook. We also had a presence on mastodon, which cross-posed to my personal twitter account. I’d never deleted it, so I also turned Cheap Bots Done Quick on to it with some sincere left wing content and a bunch of posts about the festival. Shelly also wrote a press release and subsequently we were included in some concert listings as well as the Other Minds mailings, which certainly helped.

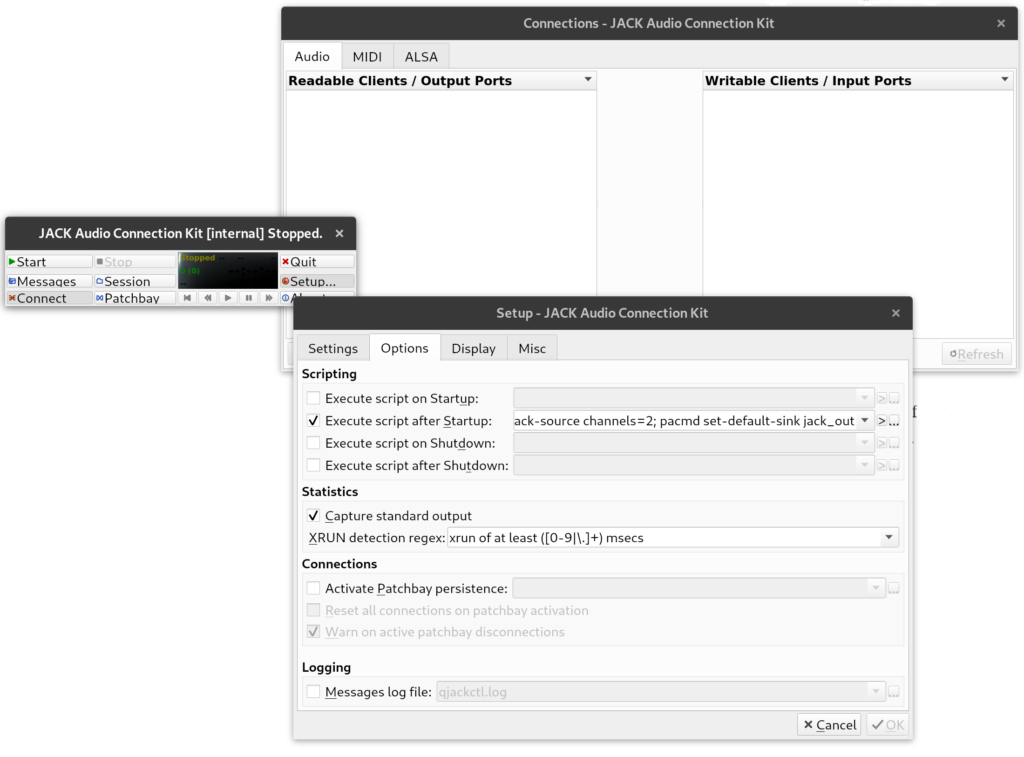

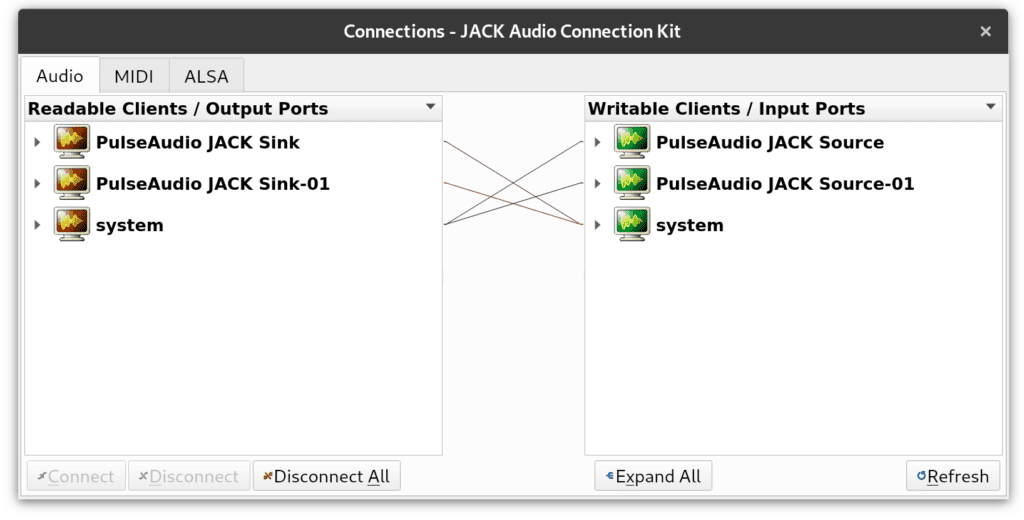

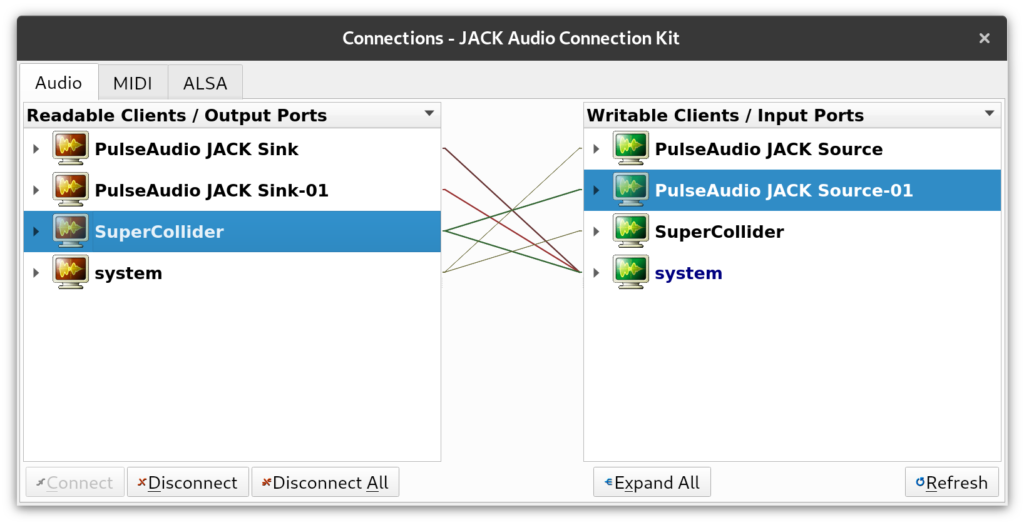

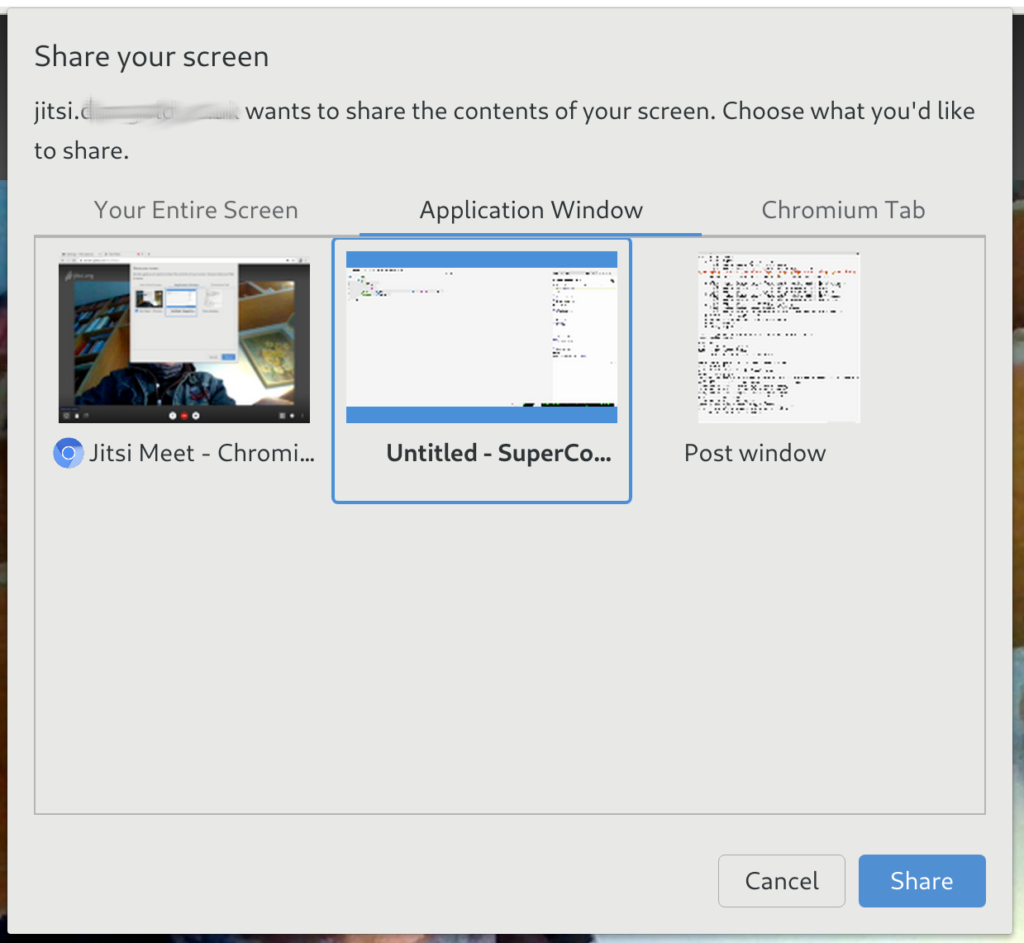

As the festival drew ever closer, my attention turned to the streaming process and also providing tech support to people inexperienced with streaming. We had “new to networking” categories and “student” categories and hoped to help people get into telematic performance who might not have done it before. After a long Jitsi meeting with someone new to networking, I wrote the conversation up in a blog post for fossbox. I learned how to do weird things with system audio in BigBlueButton.

I also started trying to harden the WordPress site against heavy traffic with SuperCache. This says it can’t cache the mobile site without Jetpack, so I installed the Jetpack plugin to find it has spyware in it. I set about trying to disable it, something their privacy policy notes the site owner can do. Their privacy policy doesn’t note that it requires either patching the source code or installing yet another plugin to patch the source code. Otherwise, it merrily ignores Do Not Track settings, sharing the data with their parent company. Alas, all of WordPress seems to be this kind of dodgy grift these days.

In the end, we presented 97 groups. We raised more than £1000 in donations. These are meant to go to the independent artists in the festival, of which I don’t yet have a count – they’ve got yet another form to fill out. I don’t know how many people passed on stage and backstage but it was a lot. We streamed roughly two terabytes of festival.

My todo list now is: Get away from wordpress to a lower-carbon system such as Hugo. Find and deploy a database with a Google-sheets-like interface, so I can do SQL while Shelly edits. Get rid of Google forever for real.

We’re planning on repeating the festival next year, so we really should start getting on funding applications. I felt a real sense of camaraderie in the VR algoraves, so it would be nice if the entire festival was in VR next time. Mozilla hubs is still kind of buggy, but hopefully it will be better by next year. Of course, Second Life supports audio streams, so there’s always a dual option for the overly old school among us.

It’s been a week and a half since the festival ended and I still feel slightly tired out after it. This post mostly only covers what I did for the festival but not Shelly. It would be more than twice as long if it included all of her intense work. There will be forthcoming a more how-to post on running the data admin. I’m not sure anyone would want to copy us, but it did work and I think a documented process is valuable. I’d like to see an AGPL solution that can be customisable for the arts. And, as promised, I’ll talk in detail about how we managed the streaming, as that ended up working really well.

If you want to make a donation, all of the money raised via paypal before 10 August will go to our independent artists.